Another survey arrived this week, this time in the mail. It was the City of Boulder 2011 Community Survey. It started out:

“Dear Boulder Resident,

[…] To get a representative sample of people living in Boulder, this questionnaire should be completed by the adult (anyone 18 years or older) in your household who most recently had a birthday.

Please have this person take a few minutes to answer all the questions and return the survey in the postage-paid envelope […].”

Hmmm. A few minutes? Are they kidding? The survey had 170 questions and ran to 8,500 words. Spending just 6 seconds on each question (and on how important it is to the City of Boulder) adds up to 17 minutes for the whole survey. Thoughtfully considering each question could take hours.
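For anyone who wants to check that arithmetic, here is a quick sketch in Python. The 170 questions and the 6-second pace come from the numbers above; the 60-second "thoughtful" pace is purely an assumed figure for comparison, not something I measured.

```python
# Rough completion-time estimate for a paper survey.
QUESTION_COUNT = 170       # questions on the survey (from the post)
RUSHED_SECONDS = 6         # seconds per question at a rushed pace (from the post)
THOUGHTFUL_SECONDS = 60    # ASSUMPTION: illustrative pace for a considered answer


def minutes_to_complete(questions: int, seconds_per_question: float) -> float:
    """Return the total answering time in minutes."""
    return questions * seconds_per_question / 60


rushed = minutes_to_complete(QUESTION_COUNT, RUSHED_SECONDS)
thoughtful = minutes_to_complete(QUESTION_COUNT, THOUGHTFUL_SECONDS)

print(f"Rushed pace: {rushed:.0f} minutes")
print(f"Thoughtful pace: {thoughtful:.0f} minutes ({thoughtful / 60:.1f} hours)")
```

Swap in your own question count and pace to sanity-check any survey before you send it out.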

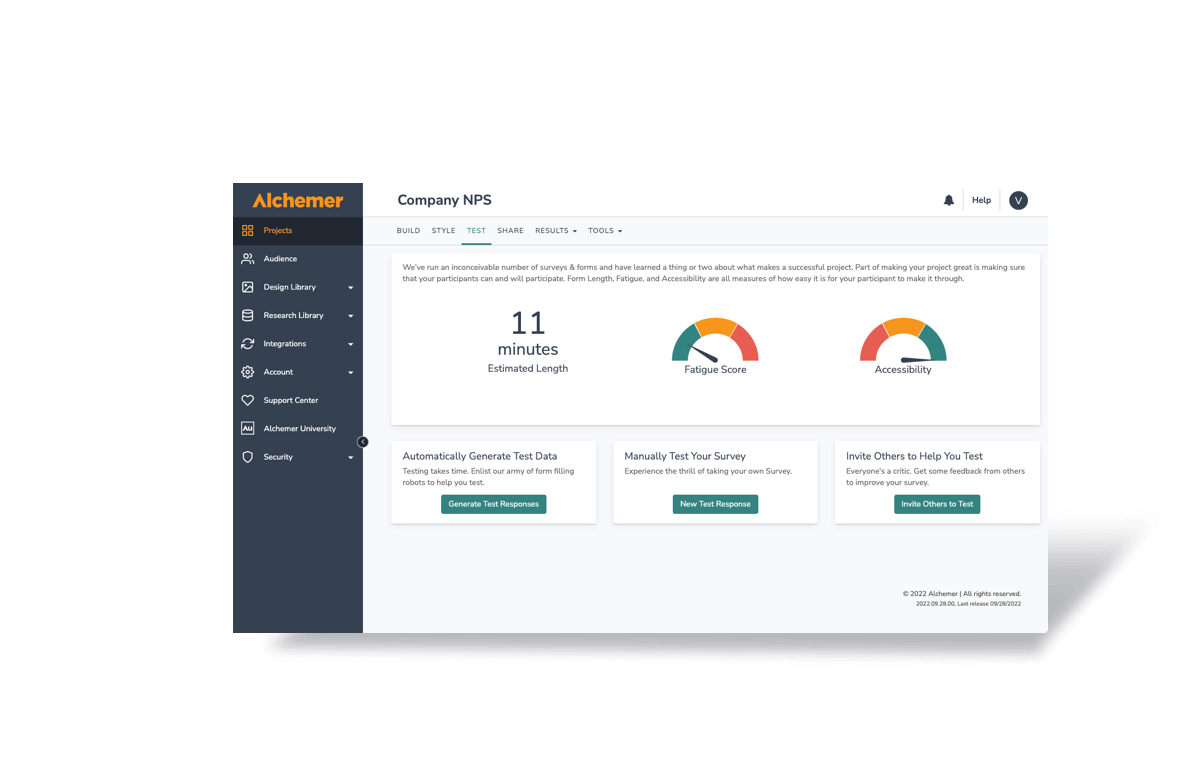

A survey like this undoubtedly creates “survey fatigue,” but that alone is probably not its biggest problem.

The biggest problem with this survey is that the authors made a number of mistakes when putting it together.

Here’s the list, from my previous blog entries, of the most common mistakes survey authors make:

- Having little or no understanding of the target audience

- Providing multiple choice lists that are too restrictive

- Requiring answers to all questions (online surveys only)

- Asking too many open-ended questions

- Using ranking questions incorrectly, or overusing them

- Asking unnecessary questions

- Asking too many questions

- Asking two questions in one

- Making questions too general

- Putting too little thought and planning into writing the survey, period

The survey above has been useful in helping me refine the list of common mistakes (which supports the old adage that nothing is ever a complete failure, as it can often serve admirably as a bad survey example). To wit, here is the refined list:

10 Common Mistakes Made When Writing Surveys

1. Having little or no understanding of the target audience and what information they will be able to provide

2. Providing multiple choice lists that are too restrictive

3. Requiring answers to all questions (online surveys only)

4. Asking too many open-ended questions (or asking open-ended questions that are not useful)

5. Using ranking questions incorrectly, or overusing them

6. Asking unnecessary questions or ones that won’t produce usable information

7. Asking too many questions and/or including disjointed laundry-list questions

8. Asking two questions in one

9. Making questions too general

10. Putting too little knowledgeable thought and planning into writing the survey, period

Below are some examples from the survey that support this refinement of my list of mistakes.

First, an example of the type of open-ended question that is not useful:

What do you think should be the top three priorities of the Boulder City Council in 2012?

This type of question will never lead to gathering useful information, except by accident.

The reason is that the respondent has been given no context in which to answer the question: What’s on the City’s docket? What kinds of things can and will the Council address? Are there budget constraints? It is not fair to respondents to make them guess at the context.

This type of question is what gives open-ended questions a bad name.

As for the laundry-list questions: give your respondents a break, literally. At the very least, break the list up into smaller pieces. A more thoughtful way to present such a question would be to group the items into topic areas.

In summary, the main mistakes in the City of Boulder 2011 Community Survey were:

- Mistake 4: Asking too many open-ended questions (or asking open-ended questions that are not useful)

- Mistake 7: Asking too many questions and/or including disjointed laundry-list questions

- Mistake 10: Putting too little knowledgeable thought and planning into writing the survey, period

Mistakes 4 and 7 were the result of survey mistake #10: the authors did not put enough knowledgeable thought and planning into writing the survey.

As a result, the data collected by this survey will not necessarily give the City the information it wants. There is really no way to know how much survey fatigue has affected the answers. And a survey of this length will almost certainly lose a significant portion of the target audience, because many people will simply not fill it out.

I will address the issue of appropriate survey length in my next blog post. Happy Survey-Taking!