A recent teleconference titled “The Key to Effective Surveys” covered several critical aspects of survey design. Here’s a breakdown of the key questions addressed, to help streamline your survey creation process:

Q1. Are customers less likely to respond to an online survey if they know we’ll know who they are by their email address?

A1: While confidentiality concerns may arise, they typically pose minimal issues, especially for surveys with low sensitivity. Online survey tools ensure secure data handling, maintaining participant anonymity when necessary.

Q2. How do you decide when to keep a survey blind or non-blind? Of course, a customer satisfaction survey would be by definition a non-blind survey. But what about some of the more attitudinal/behavioral, competitive, and brand-equity-related surveys?

A2: Blind surveys withhold the surveyor’s identity, while non-blind ones reveal it. Use non-blind surveys to leverage existing relationships and enhance response rates, especially in brand-related inquiries.

For example, if you are Nike and you want to know how you compare to another company, you want unbiased, honest feedback about something you offer in comparison to something offered by that company, so a blind survey fits. If instead you are trying to leverage the relationship the respondents have with you, and you want them to feel that connection, use a non-blind survey.

Q3. A follow-up question on blind vs. non-blind: how does this affect response rate? Does it help the response rate if not blind? By blind, I mean … not disclosing who is conducting/sponsoring the survey.

A3: Response rates hinge on the rapport between participants and the company. Non-blind surveys draw on that connection, so disclosing who is conducting the survey tends to help the response rate.

Q4. Is it better to have few open-ended questions?

A4: While open-ended queries offer broad insights, they often yield diverse responses, complicating data analysis. Survey platforms recommend balancing open-ended questions with structured ones for optimal results.

In general, 60% of respondents don’t answer open-ended questions, and of the 40% who do, 90% give different answers. You get too much data and not enough information.
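To make those percentages concrete, here is a small arithmetic sketch. The figure of 1,000 respondents is a hypothetical chosen for illustration, and reading "90% give different answers" as "90% of the answers are unique" is an assumption.

```python
# Hypothetical number of people who reach the open-ended question.
respondents = 1000

# 40% answer the open-ended question (the other 60% skip it).
answered = respondents * 40 // 100

# Assumption: 90% of those answers are worded differently from one another,
# so each one has to be read and coded by hand.
distinct_answers = answered * 90 // 100

print(f"{answered} answers, about {distinct_answers} of them distinct")
```

Under these assumptions, a single open-ended question leaves hundreds of one-off answers to categorize before any pattern becomes visible, which is the "too much data, not enough information" problem.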

Q5. What is a good response rate (%) for web surveys sent via email?

A5: Response rate depends on the connection the target audience has with the company conducting the survey. For instance, if the audience consists of customers, employees, or community members, a 50% response rate is good.
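As a rough illustration, the benchmark can be checked with a few lines of Python. The function name, the sample counts, and the definition used here (completed surveys divided by delivered invitations) are assumptions for this sketch, not something specified in the teleconference.

```python
def response_rate(completed: int, delivered: int) -> float:
    """Share of delivered invitations that produced a completed survey."""
    if delivered <= 0:
        raise ValueError("delivered must be a positive number of invitations")
    return completed / delivered


# Hypothetical campaign: 500 emails delivered, 230 surveys completed.
rate = response_rate(completed=230, delivered=500)

# Compare against the 50% benchmark mentioned for audiences with a close connection.
meets_benchmark = rate >= 0.50

print(f"Response rate: {rate:.0%}, meets 50% benchmark: {meets_benchmark}")
```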

Q6. What are some examples of effective wording to help the audience be more definitive in their decision response instead of answering the middle of the road to “play it safe”?

A6: While wording nuances exist, forcing definitive responses risks biasing data. Survey software encourages neutral options to capture nuanced opinions accurately.

Q7. Any feelings on time of day to send online survey and day of the week?

A7: B2B surveys thrive mid-week, while B2C surveys perform best during leisure hours, ensuring maximum engagement.

Q8. When we do focus groups, we like to end with a written survey to help gather the same information in a quantitative way. Do you think that this is a good thing or a bad thing given that they have already been asked all of the questions in the group?

A8: Post-group surveys complement focus group data by capturing individual perspectives often overshadowed by group dynamics.

Q9. What is the best survey tool on the market?

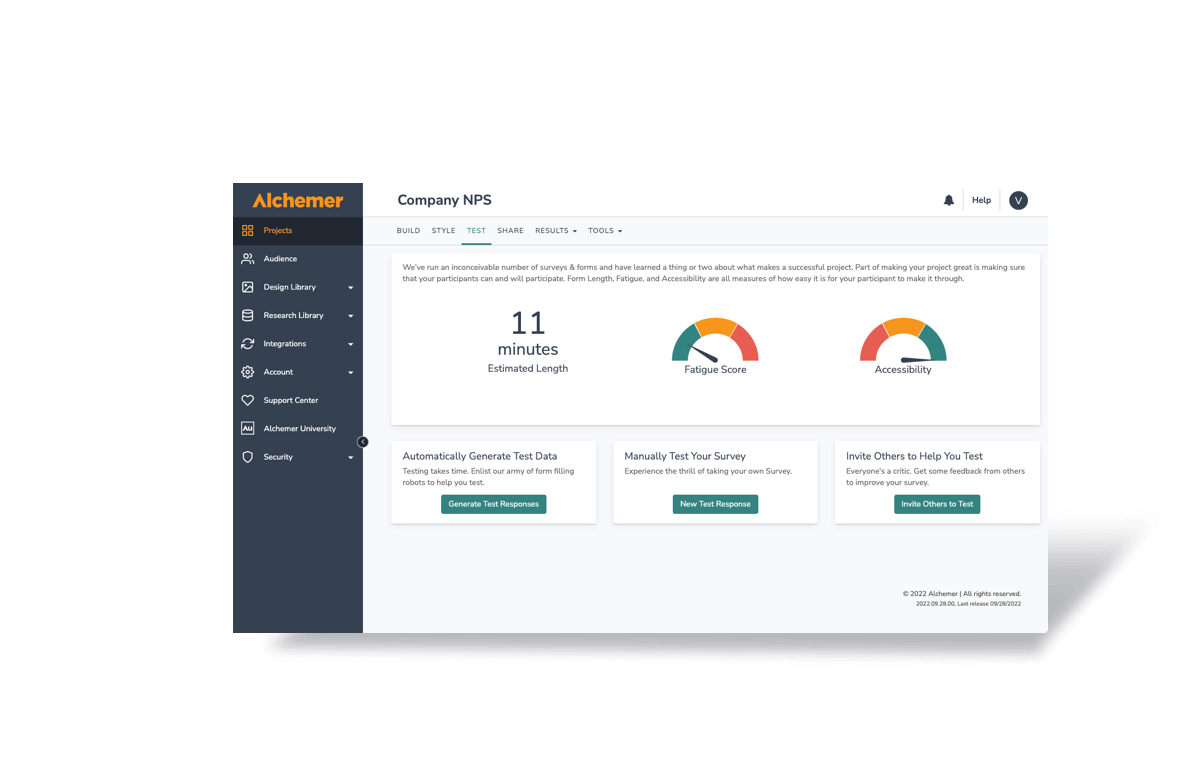

A9: Alchemer stands out for its comprehensive features and exceptional service.

Q10. When’s the ideal time to send event follow-up surveys?

A10: Send them promptly after the event to maximize participant recall while still getting thoughtful responses.

Q11. What is the best survey method/design to capture Price Sensitivity amongst consumers?

I.e., how do we get accurate information about how much a consumer is willing to pay? (We see examples of consumers saying they will spend, but in reality they don’t.)

A11: Conjoint analysis in surveys helps to gather accurate insights. It simulates trade-offs between price and product features, similar to real-life situations. This method allows researchers to understand how consumers make decisions. By analyzing these trade-offs, businesses can make informed decisions on pricing and product development.
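The trade-off idea can be sketched in a few lines of Python. This is a minimal, ratings-based illustration and not a full conjoint study: the prices, tiers, and ratings are hypothetical, and the level-mean shortcut below only works because every price/tier combination is rated exactly once (a balanced full-factorial design). Real studies usually rely on choice-based designs and regression-style models in dedicated software.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical 1-10 ratings one respondent gave to every price/tier combination.
ATTRIBUTES = ("price", "tier")
ratings = {
    ("$20", "basic"): 7, ("$20", "premium"): 9,
    ("$30", "basic"): 5, ("$30", "premium"): 8,
    ("$40", "basic"): 2, ("$40", "premium"): 5,
}


def part_worths(ratings, attributes):
    """Part-worth of a level = mean rating at that level minus the grand mean."""
    grand_mean = mean(list(ratings.values()))
    by_level = {name: defaultdict(list) for name in attributes}
    for profile, score in ratings.items():
        for name, level in zip(attributes, profile):
            by_level[name][level].append(score)
    return {
        name: {level: mean(scores) - grand_mean for level, scores in levels.items()}
        for name, levels in by_level.items()
    }


def importance(worths):
    """Relative importance = an attribute's part-worth range over the sum of ranges."""
    ranges = {
        name: max(levels.values()) - min(levels.values())
        for name, levels in worths.items()
    }
    total = sum(ranges.values())
    return {name: spread / total for name, spread in ranges.items()}


worths = part_worths(ratings, ATTRIBUTES)
shares = importance(worths)

for name in ATTRIBUTES:
    print(name, {level: round(value, 2) for level, value in worths[name].items()},
          f"importance {shares[name]:.0%}")
```

In this made-up data, price carries more weight than tier, so the respondent's stated preferences shift more with price than with features. The point of the method is that this sensitivity is inferred from trade-offs instead of from asking directly what someone would pay.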

You can use these insights in your survey strategy. The key to effective surveys is to utilize the latest survey software. This will help you collect comprehensive data. It will also assist in making informed decisions.